Automatically Building and Deploying Jekyll on AWS with CodePipeline

In our last post we covered how to use S3 and CloudFront to host your Jekyll site on AWS statically, which keeps it fast and very inexpensive to operate. In this post, we will cover how to set up CodePipeline and CodeBuild to automatically rebuild the site and publish it to the web whenever you push a change to your CodeCommit repository. Once your website is hooked up this way, you will never want to go back: you can concentrate on adding content and designing your site without worrying about how it actually goes live on the web. It’s a wonderful way to work.

Building the Jekyll Site Automatically

Whenever we make a change to our Jekyll site (adding a blog post, changing the theme, whatever), we need to rebuild it using the command jekyll build. To do this on AWS, we use the CodeBuild service. We pay only for the build compute time we actually use, so it’s a very cost-effective solution.

Create a new file in your infra directory called codebuild.yml.

If you’ve been following along, you’ll recognize some variables in the CloudFormation template below that the Serverless Framework fills in for us; for example, ${self:custom.account} is our AWS account number.
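To make the substitution concrete, here is a minimal Python sketch of how ${self:...} references expand against a nested configuration. It is not the Serverless Framework's real resolver (which also handles opt:, file() and default values); the lookup and resolve helpers and every sample value (account number, region, repo name) are hypothetical.

```python
import re
from typing import Any, Dict

# An innermost ${self:...} reference: its body contains no "$", "{" or "}".
SELF_REF = re.compile(r"\$\{self:([^${}]+)\}")


def lookup(config: Dict[str, Any], dotted_path: str) -> Any:
    """Walk a nested dict along a dotted path such as 'custom.account'."""
    node: Any = config
    for key in dotted_path.split("."):
        node = node[key]
    return node


def resolve(template: str, config: Dict[str, Any]) -> str:
    """Replace ${self:...} references, innermost first, until none remain.

    Resolving the innermost reference first is what lets a nested reference
    such as ${self:provider.environment.${self:custom.stage}_subdomain} work.
    """
    while True:
        expanded = SELF_REF.sub(
            lambda m: str(lookup(config, m.group(1).strip())), template
        )
        if expanded == template:
            return expanded
        template = expanded


# Hypothetical sample values. In the real serverless.yml, custom.stage is
# itself ${opt:stage, self:provider.stage}; this sketch does not handle opt:.
config = {
    "provider": {
        "region": "us-east-1",
        "environment": {"dev_subdomain": "dev", "prod_subdomain": "www"},
    },
    "custom": {"account": "123456789012", "repo": "my-jekyll-site", "stage": "prod"},
}

print(resolve("arn:aws:codecommit:${self:provider.region}:${self:custom.account}:${self:custom.repo}", config))
# -> arn:aws:codecommit:us-east-1:123456789012:my-jekyll-site
print(resolve("${self:provider.environment.${self:custom.stage}_subdomain}", config))
# -> www
```

The same mechanism produces every ARN and resource name in the templates that follow.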

Here’s the file:

```yaml
# The build project that runs buildspec.yml in the tree
Resources:
  CodeBuildServiceRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Action:
              - sts:AssumeRole
            Principal:
              Service:
                - codebuild.amazonaws.com
  CodeBuildServicePolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: CodeBuildServicePolicy
      Roles:
        - !Ref CodeBuildServiceRole
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Action:
              - logs:CreateLogGroup
              - logs:CreateLogStream
              - logs:PutLogEvents
            Resource: "arn:aws:logs:${self:provider.region}:${self:custom.account}:*"
          - Effect: Allow
            Action:
              - codecommit:GitPull
            Resource: "arn:aws:codecommit:${self:provider.region}:${self:custom.account}:${self:custom.repo}"
          - Effect: Allow
            Action:
              - s3:GetObject
              - s3:GetObjectVersion
              - s3:PutObject
              # aws s3 sync lists the destination bucket, so it needs ListBucket too
              - s3:ListBucket
            Resource:
              - "arn:aws:s3:::${self:custom.s3Bucket}*"
          - Effect: Allow
            Action:
              - cloudfront:CreateInvalidation
            Resource: "*"
  CodeBuildProject:
    Type: AWS::CodeBuild::Project
    Properties:
      Name: ${self:custom.project}-${self:custom.stage}
      Description: Build for ${self:custom.subdomain}.${self:custom.domain}
      ServiceRole: !GetAtt CodeBuildServiceRole.Arn
      Artifacts:
        Type: CODEPIPELINE
      Environment:
        # CloudFormation expects the enum value LINUX_CONTAINER here
        Type: LINUX_CONTAINER
        ComputeType: BUILD_GENERAL1_SMALL
        Image: aws/codebuild/amazonlinux2-x86_64-standard:3.0
        EnvironmentVariables:
          - Name: CLOUDFRONT_DISTRO_ID
            Value: ${self:custom.cloudfront_id}
          - Name: S3_BUCKET
            Value: ${self:custom.s3Bucket}
      LogsConfig:
        CloudWatchLogs:
          Status: ENABLED
          GroupName: /build/${self:custom.project}-${self:custom.stage}
      Source:
        Type: CODEPIPELINE
      TimeoutInMinutes: 60
```

It’s a pretty long file, it’s true, but there’s nothing complicated here.

The first two stanzas give the CodeBuild service the privileges it needs to do its work: a role that CodeBuild can assume, and a policy attached to that role that lets it write to CloudWatch Logs, pull source code from the git repo we set up last time, copy the generated HTML files (the actual website) to the static S3 bucket that houses them, and invalidate the CloudFront cache.
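One detail worth noticing is the S3 resource pattern: it ends in * with no slash. The Python sketch below approximates IAM wildcard matching with fnmatchcase (an assumption that holds here because the pattern contains no ? or [ characters) to show what that pattern covers, assuming the prod stage so that s3Bucket is jekyll-web-prod. The bucket ARN itself matches, which is what bucket-level actions such as s3:ListBucket are checked against; so do the website's objects and the jekyll-web-prod-codepipeline bucket, which is where CodeBuild reads its input artifact. The artifact key used below is hypothetical.

```python
from fnmatch import fnmatchcase

# The Resource pattern from codebuild.yml after Serverless substitution,
# assuming stage "prod" (so s3Bucket resolves to "jekyll-web-prod").
POLICY_RESOURCE = "arn:aws:s3:::jekyll-web-prod*"


def allowed(resource_arn: str, pattern: str = POLICY_RESOURCE) -> bool:
    """Approximate IAM wildcard matching: '*' matches any run of characters.

    fnmatchcase also gives '[...]' a special meaning that IAM does not;
    the pattern above contains no brackets, so the approximation holds.
    """
    return fnmatchcase(resource_arn, pattern)


for arn in [
    "arn:aws:s3:::jekyll-web-prod",                        # the website bucket
    "arn:aws:s3:::jekyll-web-prod/index.html",             # an object in it
    "arn:aws:s3:::jekyll-web-prod-codepipeline/example-artifact.zip",  # hypothetical key
    "arn:aws:s3:::some-other-bucket/index.html",           # unrelated bucket
]:
    print(arn, allowed(arn))
```

The flip side of this convenience is that the pattern would also match any other bucket whose name happens to start with jekyll-web-prod.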

Then the CodeBuildProject resource defines the CodeBuild project itself. It tells AWS what kind of build environment we need (a small Amazon Linux 2 container) and where it should get the source code from (CodePipeline; see below). Note that we set two environment variables for the build: the S3 bucket where the static HTML files that Jekyll builds should go, and the ID of the CloudFront distribution that fronts it.

Triggering the Build on a Code Commit

Now we want to set up our CodePipeline, which will automatically trigger that build whenever we push any changes to the master branch of our repo.

Create a file codepipeline.yml in your infra directory with this content:

```yaml
Resources:
  CodePipelineBucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: ${self:custom.s3Bucket}-codepipeline
      PublicAccessBlockConfiguration:
        BlockPublicAcls: true
        BlockPublicPolicy: true
        IgnorePublicAcls: true
        RestrictPublicBuckets: true
  CodePipelineServiceRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - codepipeline.amazonaws.com
            Action: "sts:AssumeRole"
      Path: /
      Policies:
        - PolicyName: CodePipelineServicePolicy
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action:
                  - "codecommit:CancelUploadArchive"
                  - "codecommit:GetBranch"
                  - "codecommit:GetCommit"
                  - "codecommit:GetUploadArchiveStatus"
                  - "codecommit:UploadArchive"
                Resource: "arn:aws:codecommit:${self:provider.region}:${self:custom.account}:${self:custom.repo}"
              - Effect: Allow
                Action:
                  - "codebuild:BatchGetBuilds"
                  - "codebuild:StartBuild"
                Resource: "*"
              - Effect: Allow
                Action:
                  - "s3:*"
                Resource: "arn:aws:s3:::${self:custom.s3Bucket}-codepipeline/*"
  AmazonCloudWatchEventRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - events.amazonaws.com
            Action: "sts:AssumeRole"
      Path: /
      Policies:
        - PolicyName: CodePipelineExecutionPolicy
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action: "codepipeline:StartPipelineExecution"
                Resource: "arn:aws:codepipeline:${self:provider.region}:${self:custom.account}:${self:custom.project}-${self:custom.stage}"
  AmazonCloudWatchEventRule:
    Type: "AWS::Events::Rule"
    Properties:
      EventPattern:
        source:
          - aws.codecommit
        detail-type:
          - CodeCommit Repository State Change
        resources:
          - "arn:aws:codecommit:${self:provider.region}:${self:custom.account}:${self:custom.repo}"
        detail:
          event:
            - referenceCreated
            - referenceUpdated
          referenceType:
            - branch
          referenceName:
            - master
      Targets:
        - Arn: "arn:aws:codepipeline:${self:provider.region}:${self:custom.account}:${self:custom.project}-${self:custom.stage}"
          RoleArn: !GetAtt
            - AmazonCloudWatchEventRole
            - Arn
          Id: codepipeline-AppPipeline
  AppPipeline:
    Type: "AWS::CodePipeline::Pipeline"
    Properties:
      Name: ${self:custom.project}-${self:custom.stage}
      RoleArn: !GetAtt
        - CodePipelineServiceRole
        - Arn
      Stages:
        - Name: Source
          Actions:
            - Name: SourceAction
              RunOrder: 1
              ActionTypeId:
                Category: Source
                Owner: AWS
                Version: 1
                Provider: CodeCommit
              Configuration:
                BranchName: master
                RepositoryName: ${self:custom.repo}
                PollForSourceChanges: false
              OutputArtifacts:
                - Name: SourceArtifact
        - Name: Build
          Actions:
            - Name: BuildAction
              RunOrder: 2
              ActionTypeId:
                Category: Build
                Owner: AWS
                Version: 1
                Provider: CodeBuild
              InputArtifacts:
                - Name: SourceArtifact
              OutputArtifacts:
                - Name: BuildArtifact
              Configuration:
                ProjectName: ${self:custom.project}-${self:custom.stage}
      ArtifactStore:
        Type: S3
        Location: ${self:custom.s3Bucket}-codepipeline
```

This is another long CloudFormation template, but again there’s nothing conceptually challenging here.

The first stanza just sets up an S3 bucket where the pipeline places the source code, and from which CodeBuild picks it up automatically. We configure that bucket to block all public access, so it stays private to us.

Then we set up a service role for the pipeline, with an inline policy. The pipeline needs the ability to work with CodeCommit, our git repo; to start builds in CodeBuild; and, of course, to put source code into the S3 bucket we just created.

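We also need to give CloudWatch Events (now Amazon EventBridge) the ability to start the pipeline. The AmazonCloudWatchEventRole grants permission to start this one pipeline, and the AmazonCloudWatchEventRule watches the repo: whenever the master branch reference is created or updated, which is what a push to master does, the rule kicks off the pipeline.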
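To see which pushes actually start the pipeline, here is a greatly simplified Python sketch of how an exact-value event pattern like ours is matched against an event. Real EventBridge matching supports much more (prefix, anything-but, numeric and exists filters); the matches and push_event helpers, the repository ARN and the account number are hypothetical, while the field names mirror the pattern in codepipeline.yml.

```python
from typing import Any, Dict

REPO_ARN = "arn:aws:codecommit:us-east-1:123456789012:my-jekyll-site"  # hypothetical

# Mirror of the EventPattern in codepipeline.yml after substitution.
PATTERN: Dict[str, Any] = {
    "source": ["aws.codecommit"],
    "detail-type": ["CodeCommit Repository State Change"],
    "resources": [REPO_ARN],
    "detail": {
        "event": ["referenceCreated", "referenceUpdated"],
        "referenceType": ["branch"],
        "referenceName": ["master"],
    },
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Exact-value matching only: every pattern key must be present in the event.

    A list of values matches if the event's value is one of them; when the
    event field is itself a list (like "resources"), any overlap counts.
    Nested dicts are matched recursively.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in values):
                return False
    return True


def push_event(ref_name: str, event: str = "referenceUpdated", ref_type: str = "branch") -> Dict[str, Any]:
    """Build a trimmed-down CodeCommit state-change event for testing."""
    return {
        "source": "aws.codecommit",
        "detail-type": "CodeCommit Repository State Change",
        "resources": [REPO_ARN],
        "detail": {"event": event, "referenceType": ref_type, "referenceName": ref_name},
    }


print(matches(PATTERN, push_event("master")))            # push to master
print(matches(PATTERN, push_event("feature/new-post")))  # push to another branch
```

Pushes to other branches, tag creation and branch deletion all fail to match, so only changes to master trigger a rebuild.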

Finally, we have the AppPipeline pipeline itself. This sets up two distinct stages. The Source stage does a full pull from git and copies the output into the pipeline bucket; this output is called the SourceArtifact. That artifact is then referenced as the input to the second stage, Build.
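The artifact names are the only thing wiring the two stages together, so a typo there breaks the pipeline. The following Python sketch checks that every input artifact was produced by an earlier stage. STAGES is a hypothetical, trimmed mirror of the Stages section of codepipeline.yml, and the check deliberately ignores RunOrder within a single stage, which our two-stage pipeline does not need.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical Python mirror of the Stages in codepipeline.yml.
STAGES: List[Dict] = [
    {"Name": "Source", "Actions": [
        {"Name": "SourceAction", "InputArtifacts": [], "OutputArtifacts": ["SourceArtifact"]},
    ]},
    {"Name": "Build", "Actions": [
        {"Name": "BuildAction", "InputArtifacts": ["SourceArtifact"], "OutputArtifacts": ["BuildArtifact"]},
    ]},
]


def unresolved_inputs(stages: List[Dict]) -> List[Tuple[str, str, str]]:
    """Return (stage, action, artifact) for inputs no earlier stage produced."""
    produced: Set[str] = set()
    problems: List[Tuple[str, str, str]] = []
    for stage in stages:
        for action in stage["Actions"]:
            for artifact in action["InputArtifacts"]:
                if artifact not in produced:
                    problems.append((stage["Name"], action["Name"], artifact))
        # Outputs become available only to later stages in this sketch.
        for action in stage["Actions"]:
            produced.update(action["OutputArtifacts"])
    return problems


print(unresolved_inputs(STAGES))  # an empty list means the wiring is consistent
```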

So, to sum up: whenever there’s a push to the master branch of the git repo, the pipeline will kick in. It will fetch the full source tree, put a copy of it in S3, and then trigger the build.

The BuildSpec File

But how does CodeBuild know how to build a Jekyll website? We need a build specification file that tells CodeBuild exactly which steps to take.

This is pretty simple. In the install phase we install Jekyll, Bundler and the site’s gems. In the build phase we tell CodeBuild to take the source code that CodePipeline handed to it and run Jekyll on it with the production configuration settings. That puts the generated files in the _site directory on the build container.

Then, in the post_build phase, we copy the _site directory over to our S3 bucket for serving. Finally, we invalidate the CloudFront cache so that our reading public gets the latest and greatest right away.

If you’ve been following along, your buildspec.yml file should be at the root of your git repo and should look like this:

```yaml
version: 0.2

phases:
  install:
    commands:
      - gem install jekyll bundler
      - cd website
      - bundle install
  build:
    commands:
      - JEKYLL_ENV=production bundle exec jekyll build --config _config.yml,_config_production.yml
  post_build:
    commands:
      - aws s3 sync _site s3://$S3_BUCKET
      - aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRO_ID --paths "/*"
```

Note that the build gets S3_BUCKET and CLOUDFRONT_DISTRO_ID as environment variables. We set those on the CodeBuild project, in the EnvironmentVariables section of codebuild.yml.
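Because the post_build commands depend entirely on those two variables, a missing value fails in confusing ways (with the dev stage, for instance, the CloudFront ID in serverless.yml is empty). This Python sketch, with a hypothetical post_build_commands helper and sample values, builds the same two AWS CLI invocations from an environment mapping and refuses to proceed if either variable is missing or empty.

```python
import shlex
from typing import List, Mapping

REQUIRED = ("S3_BUCKET", "CLOUDFRONT_DISTRO_ID")


def post_build_commands(env: Mapping[str, str]) -> List[List[str]]:
    """Return the post_build commands as argument lists.

    Raises ValueError if a required variable is missing or empty, instead of
    running 'aws s3 sync _site s3://' with no bucket name.
    """
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("missing or empty environment variables: " + ", ".join(missing))
    return [
        ["aws", "s3", "sync", "_site", "s3://" + env["S3_BUCKET"]],
        ["aws", "cloudfront", "create-invalidation",
         "--distribution-id", env["CLOUDFRONT_DISTRO_ID"], "--paths", "/*"],
    ]


# Hypothetical values; inside a real build you could pass os.environ instead.
example_env = {"S3_BUCKET": "jekyll-web-prod", "CLOUDFRONT_DISTRO_ID": "E1EXAMPLE"}
for command in post_build_commands(example_env):
    print(shlex.join(command))
```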

Revisiting the Serverless Framework Configuration

As we discussed last time, the serverless.yml file holds the configuration settings that make all of this work. We need to make a few adjustments so that everything is hooked up properly when we deploy our infrastructure stack.

Here’s how ours looks now:

```yaml
service: jekyll-web

custom:
  project: jekyll-web
  account: '[YOUR ACCOUNT ID]'
  certificate: [CERTIFICATE ID]
  domain: [YOUR DOMAIN]
  sslCertArn: arn:aws:acm:us-east-1:${self:custom.account}:certificate/${self:custom.certificate}
  stage: ${opt:stage, self:provider.stage}
  subdomain: ${self:provider.environment.${self:custom.stage}_subdomain}
  s3Bucket: jekyll-web-${self:custom.stage}
  cloudfront_id: ${self:provider.environment.${self:custom.stage}_cloudfront_id}
  repo: [YOUR REPO NAME]

provider:
  name: aws
  stage: prod
  region: [YOUR PREFERRED AWS REGION]
  environment:
    dev_subdomain: dev
    dev_cloudfront_id:
    prod_subdomain: www
    prod_cloudfront_id: [YOUR CLOUDFRONT ID]
  stackTags:
    Project: Corporate

resources:
  - ${file(s3.yml)}
  - ${file(cloudfront.yml)}
  - ${file(codebuild.yml)}
  - ${file(codepipeline.yml)}
```

We’ve added our CodeBuild and CodePipeline infrastructure pieces to the bottom, and we’ve added some custom variables that help us parameterize the scripts so we can use them for other purposes.

Note that the certificate ID and the CloudFront ID have to be added to the serverless.yml file by hand for this to work. That’s kind of lame, I admit, but whatever. It works.
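Since those values are filled in by hand, it is easy to deploy with a placeholder still in place. Here is a small Python sketch of a pre-deploy check; the unfilled_settings helper is hypothetical, and it assumes placeholders follow this article’s style (upper-case words in square brackets, such as [YOUR DOMAIN]). It scans the raw file text and also flags an empty CloudFront ID for the stage you are about to deploy.

```python
import re
from typing import List, Tuple

# Placeholders in the article's serverless.yml look like [YOUR DOMAIN] or
# [CERTIFICATE ID]: upper-case words and spaces inside square brackets.
PLACEHOLDER = re.compile(r"\[[A-Z][A-Z ]*\]")


def unfilled_settings(text: str, stage: str = "prod") -> List[Tuple[int, str]]:
    """Return (line_number, line) pairs that still need a manual value."""
    empty_cf_id = re.compile(rf"^\s*{re.escape(stage)}_cloudfront_id:\s*$")
    problems: List[Tuple[int, str]] = []
    for number, line in enumerate(text.splitlines(), start=1):
        if PLACEHOLDER.search(line) or empty_cf_id.match(line):
            problems.append((number, line.strip()))
    return problems


# Usage: run it over infra/serverless.yml before "sls deploy".
# with open("infra/serverless.yml") as f:
#     for number, line in unfilled_settings(f.read(), stage="prod"):
#         print(f"line {number}: {line}")
```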

Add the Files to Git

OK, we have a number of new files that we’ve added. If you’re doing it exactly the same way we are, your file tree should look like this:

```
.
├── buildspec.yml
├── infra
│   ├── cloudfront.yml
│   ├── codebuild.yml
│   ├── codepipeline.yml
│   ├── s3.yml
│   └── serverless.yml
├── README.md
└── website
    ├── 404.html
    ...
```

Be sure to add all these files to your git repo.

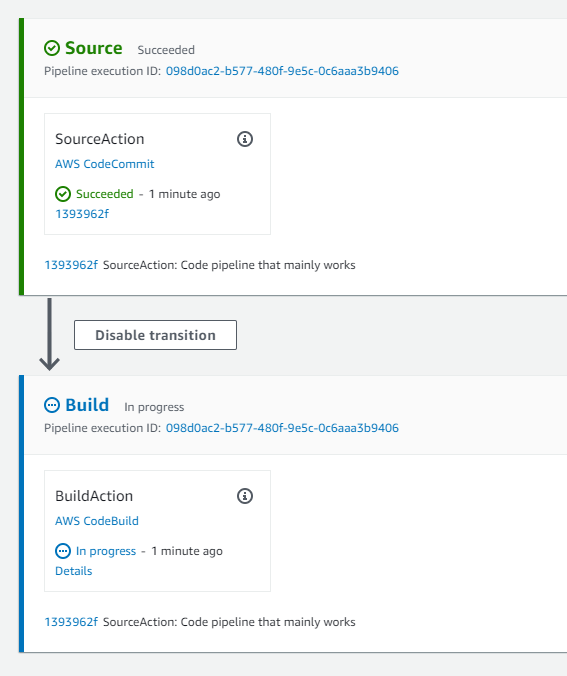

As soon as you push your commit to the git repo, CodePipeline will recognize that there’s been a change to the tree and will spring into action. If you log into your AWS console and navigate to your new CodePipeline, you should see something like this:

Deploying and Triggering the Build

Deploy all that infrastructure using the Serverless Framework:

```shell
$ sls deploy
```

It will take some time for Serverless to create the CodeBuild and CodePipeline resources in AWS.

To test it, you can make a trivial change to your Jekyll site (change the site description in the _config.yml file, for example) and push it to the master branch. You should be able to watch CodePipeline start processing.
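If you would rather check progress from a script than from the console, the CodePipeline API’s GetPipelineState call reports the latest execution status of each stage. This Python sketch takes any client with a boto3-style get_pipeline_state(name=...) method, so it can be tested without AWS credentials; the stage_statuses helper and the "NotRun" fallback are hypothetical, and the pipeline name assumes project jekyll-web and stage prod.

```python
from typing import Any, Dict, Protocol


class PipelineStateClient(Protocol):
    """Anything shaped like boto3's CodePipeline client, for our purposes."""

    def get_pipeline_state(self, *, name: str) -> Dict[str, Any]: ...


def stage_statuses(client: PipelineStateClient, pipeline_name: str) -> Dict[str, str]:
    """Map each stage name to the status of its latest execution.

    Stages that have never run have no latestExecution; this sketch reports
    them as "NotRun", a label of our own, not an AWS status value.
    """
    state = client.get_pipeline_state(name=pipeline_name)
    return {
        stage["stageName"]: stage.get("latestExecution", {}).get("status", "NotRun")
        for stage in state.get("stageStates", [])
    }


# Usage with real credentials (requires boto3):
# import boto3
# print(stage_statuses(boto3.client("codepipeline"), "jekyll-web-prod"))
```

A result such as Source Succeeded and Build InProgress means the build is still running.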

Conclusion: What Have We Wrought?

We’ve done a lot in this three-part blog series, so it’s worth reviewing.

First, we set up a Jekyll development environment fully in the cloud: we have a Cloud9 environment as our Jekyll IDE, which we only pay for when we are actually using it. The source of our Jekyll site is safely stored in a fully managed git repo in CodeCommit. When we make changes to our Jekyll site and push them to git, a pipeline springs into action and builds the static HTML files for the site automatically. These files are then automatically copied over to an S3 bucket, from which our site is securely served behind an SSL certificate. And these HTML files are distributed by CloudFront, a Content Delivery Network, so pages load quickly no matter where in the world your readers are.

Not only does that save a ton of time over the long run, but it does it extremely cheaply and reliably.

We hope this blog series has been useful to you.